About

𝕊tefano ℙeluchetti

I am research scientist at Sakana AI. Previously, I was principal research scientist at Cogent Labs, and senior quantitative analyst and data scientist at HSBC. I obtained an MSc in Economics and a PhD in statistics from Bocconi University, the latter under the supervision of Gareth O Roberts.

My research focuses on deep learning and statistics, with interests including:

- interplay between neural networks and stochastic processes;

- measure transport via diffusion processes;

- probabilistic models and scalable inference.

Research

Featured Topics

Bridge Matching for Generative Applications and Beyond

⟡ In [Pel21] I introduce bridge matching (BM), a novel method for constructing a diffusion process which transports samples between two target distributions. The approach involves: (i) mixing a diffusion bridge over its endpoints with respect to the target distributions and; (ii) matching the marginal distributions of the process of step (i) through a diffusion process. Unlike denoising diffusions ([HJA20], [SSK⁺21]), BM can bridge two arbitrary distributions without being restricted to a simple distribution at one end.

My contribution precedes several others that rely on similar constructions such as [LGL22], [LVH⁺23], [LCB⁺23] and [TMH⁺23]. [SDC⁺23] shows in detail how these and additional proposals are equivalent to specific instances of BM. [Pel21] explores different diffusion dynamics including a Brownian motion scaled by σ, which corresponds to the I²SB proposal of [LVH⁺23], and yields the rectified flow of [LGL22] for σ=0. State-of-the-art image generation models such as Stable Diffusion 3 and FLUX.1 successfully employ this method.

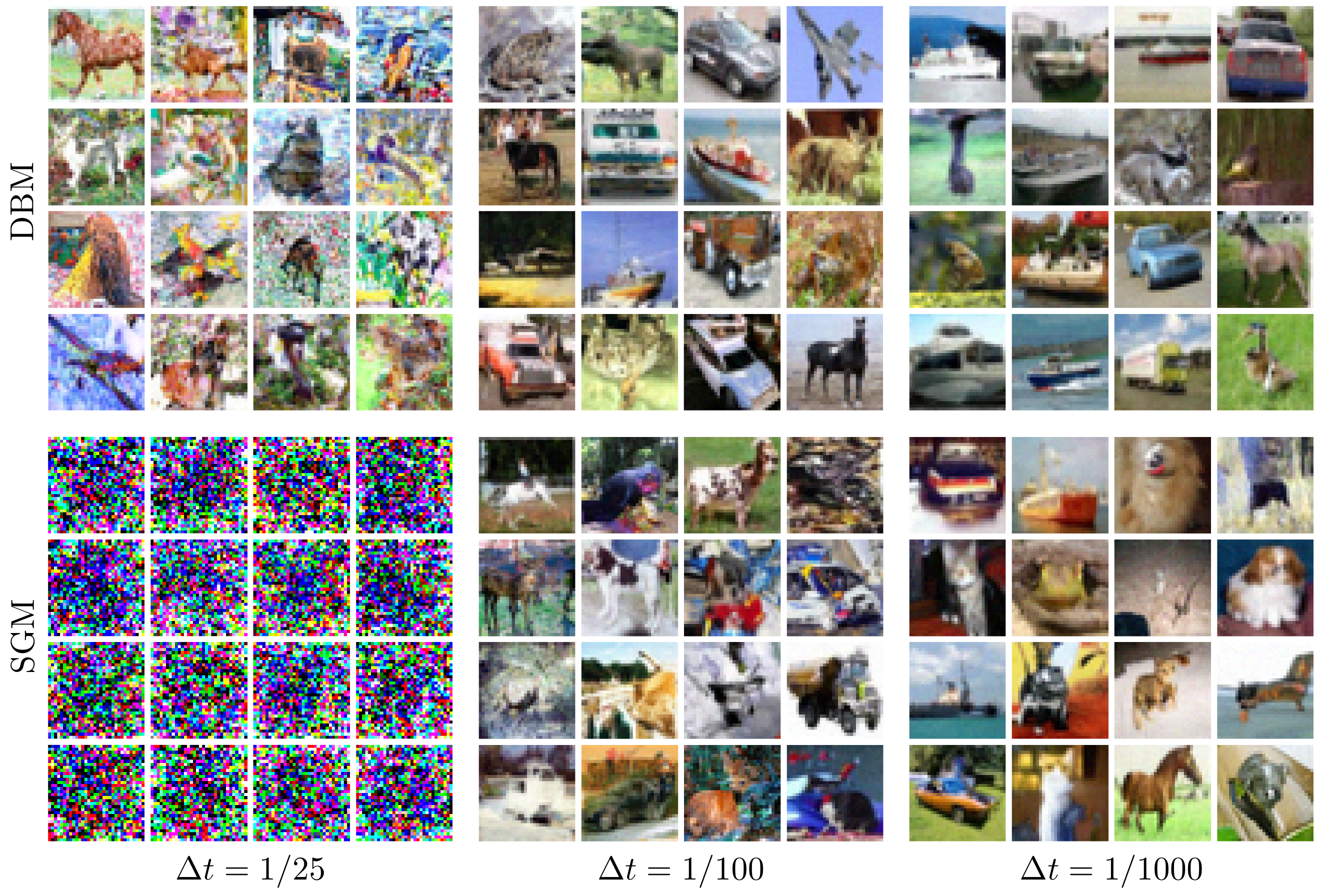

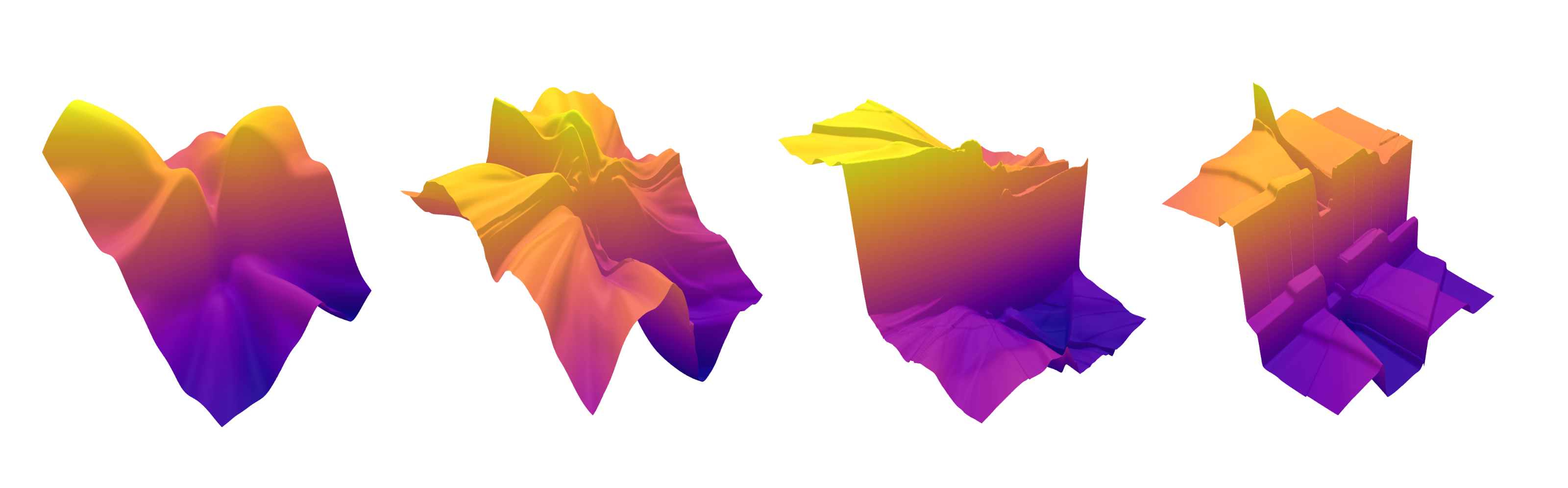

⟡ In [Pel23] I further study BM and introduce its iterative version, iterated bridge matching (I-BM, see also the concurrent work of [SDC⁺23]), which is shown to convergence toward a Schrödinger bridge / entropic optimal transport map. Contrary to the classical procedure employed in this setting, the iterative proportional fitting / Sinkhorn algorithm, I-BM achieves a valid bridge (coupling) between the target distribution at each iteration. Furthermore, I-BM exhibits improved robustness properties, which are especially relevant when approximating an optimal transport map. The theoretical findings are complemented by numerical experiments demonstrating the efficacy of BM. In generative applications, BM achieves accelerated training and superior sample quality at wider discretization intervals, as illustrated in the figure above.

⟡ In [Pel24] I introduce coupled bridge matching (BM², concurrently with [BKM⁺24]), a novel approach for learning Schrödinger bridges. Unlike the classical iterative procedures discussed previously, BM² offers an iteration-less methodology. The approach defines both forward and backward SDEs, initialized at the target distributions of interest. Each SDE performs bridge matching based on the other SDE's initial-terminal distribution. This formulation yields a straightforward regression loss function that can be efficiently optimized using standard stochastic gradient descent.

Large Neural Networks' Limits

⟡ The seminal work by [Nea96] establishes a connection between infinitely wide neural networks (NN) and Gaussian processes (GP), assuming parameters that are identically and independently distributed (iid) with finite variance. This result has significant implications, such as facilitating the analysis of the trainability of very deep NNs and the design of efficient covariance kernels for GP inference in perceptual domains. In a series of joint works with Stefano Favaro and Sandra Fortini ([PFF20]-[FFP23a]-[FFP23b]), I investigate wide NN limits arising from iid parameters following heavy tailed α-stable distributions, demonstrating convergence toward α-stable processes. The convergence findings, applicable to the prior NN model or the NN at initialization, are complemented by an analysis of the training dynamics in the neural tangent kernel (NTK) regime.

⟡ Jointly with Stefano Favaro, in [PF20] I initiate the study of stochastic processes resulting from infinitely deep neural networks. To obtain non-degenerate limits, the NN must be a residual NN with identity mapping ([HZR⁺16]). Under suitable conditions, as depth grows unbounded, convergence towards diffusion processes is achieved, assuming iid parameters with a Gaussian distribution. These findings are expanded in [PF21] to include the convolutional setting, the doubly infinite limit in depth and width, and supplementary results regarding the training dynamics in the NTK regime.

Probabilistic Models and Scalable Inference

⟡ Inference in wide Bayesian neural networks presents significant computational challenges. Particularly, random-walk Markov Chain Monte Carlo (MCMC) methods suffers from vanishing efficiency in high-dimensional parameter spaces. New states are proposed by adding perturbations to the current state, and avoiding a vanishing acceptance rates requires scaling these perturbation magnitudes inversely with dimensionality. Conversely, employing unadjusted MCMC algorithms (without acceptance steps) introduces unquantified approximation errors. In [PFP25] we demonstrate that function-space MCMC algorithms applied to wide Bayesian neural networks maintain provable efficiency in high-dimensional regimes: as network width increases unbounded, the acceptance rate converges to 1 even with fixed-size perturbations in the proposal mechanism.

⟡ The count-min sketch (CMS, [CM05]) is a randomized data structure designed to estimate token frequencies in large data streams by using a compressed representation through random hashing. A probabilistic augmentation of the CMS is introduced in [CMA18], where, under a Dirichlet process prior on the data stream, posterior token frequencies are obtained conditionally on the random hashes' counts. However, text corpora exhibit long tails in token count frequencies, which are not supported by a Dirichlet process prior. To address this shortcoming, we propose in [DFP21] and [DFP23] more suitable Bayesian non-parametric priors for the data stream, featuring a more flexible tail behavior. This approach presents significant challenges, necessitating the introduction of both novel theoretical arguments and scalable inferential procedures. In addition to providing uncertainty estimates, our Bayesian approach exhibits improved performance in estimating low-frequency tokens.

Bibliography

2025 —

2024 —

2023 —

2022 —

2021 —

2020 —

2019 —

2013 —

2012 —

† : alphabetically ordered — equal contribution.

Dev

SciLua

SciLua is a scientific computing framework designed for LuaJIT, focusing on a curated set of widely applicable numerical algorithms. It includes linear algebra (with optional language syntax extensions), automatic differentiation, random variate and low-discrepancy sequence generation modules. The framework also incorporates an implementation of the No-U-Turn Sampler (NUTS) from [HG14], a robust gradient-based MCMC algorithm, as well as a slightly improved version of the Self-adaptive Differential Evolution (SaDE) algorithm by [QS05], a global stochastic optimizer. All components have been meticulously optimized for peak performance. SciLua(LuaJIT) ranks first in the Julia Micro-Benchmarks relatively to the reference C implementation, with approximately a 9% performance gap.

-- for python quants 2015 presentation

ULua

ULua is a cross-operating-system, cross-architecture, binary LuaJIT distribution. Featuring its own package manager and package specifications, ULua enables bundling dynamic libraries and endorses semantic versioning for simplified dependency resolution. A build system, utilizing multiple VMs, automatically generates binary packages from LuaRocks. Moreover, ULua is fully self-contained and relocatable, even across operating systems.

All my projects are hosted at GitHub.